If you enjoy this topic, you will probably like my other articles and tweets. You can also check out my YouTube channel, and don't forget to subscribe and follow: I share programming and motivational tools and information to help you achieve your dreams.

Streams are a powerful Node.js feature that lets developers handle large amounts of data efficiently by reading and writing it in chunks. This approach is particularly useful for large files or data sets, because the program only ever works on a small piece of the data at a time.

One of the main advantages of using streams is that they are memory-efficient. Instead of loading an entire file or data set into memory at once, streams read and write data in smaller chunks. This means that you can work with large files or data sets without having to worry about running out of memory.
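To make the memory claim concrete, here is a minimal sketch that streams a 1 MiB scratch file and records the largest chunk it ever sees. The helper name `measureChunks` and the temp-file setup are my own assumptions for illustration; the `highWaterMark` option caps how many bytes each chunk may hold, and 64 KiB is the documented default for file read streams.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical helper: streams a file and reports the total bytes seen
// and the size of the largest single chunk delivered to the handler.
function measureChunks(filePath) {
  return new Promise((resolve, reject) => {
    let totalBytes = 0;
    let largestChunk = 0;
    // highWaterMark caps the size of each chunk (64 KiB here).
    fs.createReadStream(filePath, { highWaterMark: 64 * 1024 })
      .on('data', (chunk) => {
        totalBytes += chunk.length;
        largestChunk = Math.max(largestChunk, chunk.length);
      })
      .on('error', reject)
      .on('end', () => resolve({ totalBytes, largestChunk }));
  });
}

// Build a 1 MiB scratch file in the OS temp directory so the sketch is self-contained.
const scratchDir = fs.mkdtempSync(path.join(os.tmpdir(), 'chunks-'));
const sampleFile = path.join(scratchDir, 'sample.bin');
fs.writeFileSync(sampleFile, Buffer.alloc(1024 * 1024));

measureChunks(sampleFile).then(({ totalBytes, largestChunk }) => {
  console.log(`read ${totalBytes} bytes; largest chunk was ${largestChunk} bytes`);
});
```

The whole file passes through the handler, yet no single chunk is larger than the 64 KiB cap.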

Another advantage of using streams is that they allow data to be processed as it arrives. For example, a server can start processing a file while it is still being uploaded, rather than waiting for the entire upload to finish. The same idea underlies video and audio streaming: playback can begin as soon as the first chunks arrive.
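As a rough illustration of processing data while it arrives, the sketch below computes a SHA-256 digest incrementally, one chunk at a time. The helper `hashStream` is a hypothetical name, and the "upload" is simulated with `Readable.from()` over three in-memory buffers; in a real server the readable would be the incoming request.

```javascript
const crypto = require('crypto');
const { Readable } = require('stream');

// Hypothetical helper: hashes a stream chunk by chunk,
// without ever holding the full payload in memory.
function hashStream(readable) {
  return new Promise((resolve, reject) => {
    const hash = crypto.createHash('sha256');
    readable.on('data', (chunk) => hash.update(chunk));
    readable.on('error', reject);
    readable.on('end', () => resolve(hash.digest('hex')));
  });
}

// Stand-in for an upload: three chunks arriving one after another.
const upload = Readable.from([
  Buffer.from('hello '),
  Buffer.from('streaming '),
  Buffer.from('world'),
]);

hashStream(upload).then((digest) => console.log('sha256:', digest));
```

The digest is identical to hashing the full string `'hello streaming world'` in one call, but the work is spread across the chunks as they come in.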

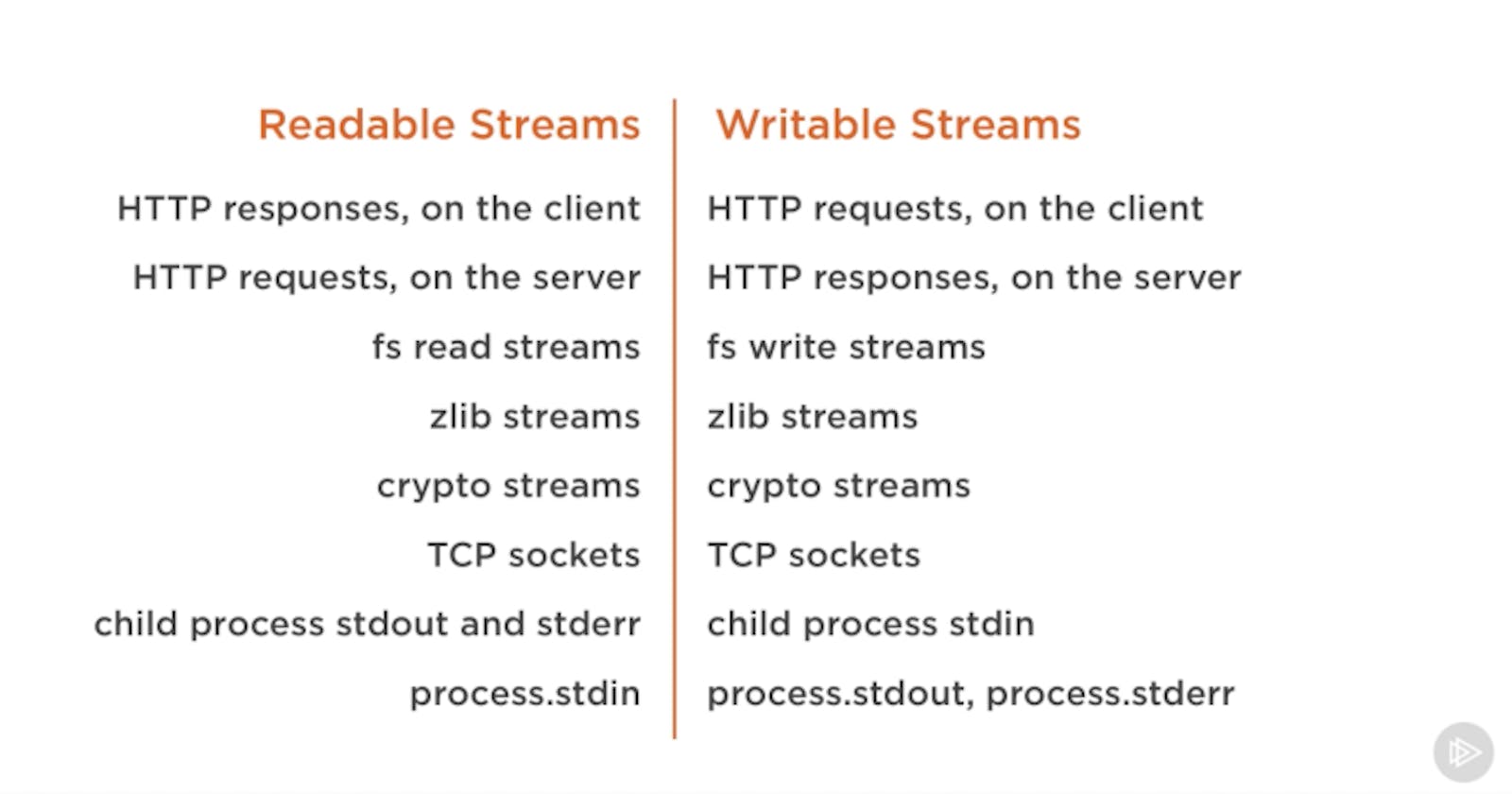

In Node.js, streams are provided by the built-in `stream` module. There are four fundamental types: readable streams, writable streams, duplex streams (both readable and writable), and transform streams (duplex streams that modify the data as it passes through).
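The stream types can be combined into a single chain. The following is a small sketch, not a canonical pattern: it wires a readable source, a transform stream (a kind of duplex stream that rewrites data in flight), and a writable sink together with `pipeline()` from the `stream` module. The function name `upperCaseAll` and the sample words are made up for the example.

```javascript
const { Readable, Transform, Writable, pipeline } = require('stream');

// Hypothetical helper: pushes words through a three-stage stream pipeline
// and resolves with the upper-cased results.
function upperCaseAll(words) {
  return new Promise((resolve, reject) => {
    // Readable: produces data, here one string per array element.
    const source = Readable.from(words);

    // Transform: output is computed from the input, chunk by chunk.
    const upperCase = new Transform({
      objectMode: true,
      transform(chunk, encoding, callback) {
        callback(null, String(chunk).toUpperCase());
      },
    });

    // Writable: consumes data, here by collecting it into an array.
    const collected = [];
    const sink = new Writable({
      objectMode: true,
      write(chunk, encoding, callback) {
        collected.push(chunk);
        callback();
      },
    });

    // pipeline() connects the stages and reports the first error, if any.
    pipeline(source, upperCase, sink, (err) => {
      if (err) reject(err);
      else resolve(collected);
    });
  });
}

upperCaseAll(['alpha', 'beta', 'gamma']).then((result) => {
  console.log(result); // [ 'ALPHA', 'BETA', 'GAMMA' ]
});
```

I prefer `pipeline()` over chaining `.pipe()` calls here because it forwards errors from every stage to one callback and cleans up the other streams when one fails.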

A readable stream, such as a file stream, can be used to read data from a source. An example of reading from a file using a readable stream is:

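    const fs = require('fs');

    const stream = fs.createReadStream('largefile.txt');

    // 'data' fires once per chunk as the file is read.
    stream.on('data', (chunk) => {
      // process the data in chunk
    });

    // 'end' fires when there is no more data to read.
    stream.on('end', () => {
      console.log('Finished reading the file');
    });

    // Without an 'error' listener, a missing file would crash the process.
    stream.on('error', (err) => {
      console.error('Failed to read the file:', err.message);
    });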
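Readable streams are also async iterables, so the same read can be written as a `for await` loop instead of event handlers. The sketch below counts the lines in a small scratch file; `countLines` and the temp-file setup are assumptions I added so the example runs on its own.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical helper: counts newline characters in a file, one chunk at a time.
async function countLines(filePath) {
  let lines = 0;
  // The loop ends when the stream ends; a stream error is thrown as an exception.
  for await (const chunk of fs.createReadStream(filePath)) {
    // No encoding was set, so each chunk is a Buffer and iterating it yields bytes.
    for (const byte of chunk) {
      if (byte === 10) lines += 1; // 10 is the byte value of '\n'
    }
  }
  return lines;
}

// Scratch file in the OS temp directory so the sketch is self-contained.
const scratchDir = fs.mkdtempSync(path.join(os.tmpdir(), 'lines-'));
const sampleFile = path.join(scratchDir, 'sample.txt');
fs.writeFileSync(sampleFile, 'one\ntwo\nthree\n');

countLines(sampleFile).then((n) => console.log(`${n} lines`)); // 3 lines
```

This form lets you use ordinary `try`/`catch` for error handling, which many people find easier to follow than separate `'error'` and `'end'` listeners.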

A writable stream, such as a file stream, can be used to write data to a destination. An example of writing to a file using a writable stream is:

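    const fs = require('fs');

    // createWriteStream truncates an existing file by default,
    // so this example writes to a separate output file.
    const stream = fs.createWriteStream('output.txt');

    stream.write('Hello World');

    // Signal that no more data will be written.
    stream.end();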
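When you write a lot of data, it helps to respect backpressure: `write()` returns `false` once the stream's internal buffer is full, and the `'drain'` event signals that it is safe to continue. Here is one possible sketch of that loop, using `once()` from the `events` module; the helper `writeLines`, the line format, and the temp-file path are all hypothetical.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');
const { once } = require('events');

// Hypothetical helper: writes `count` lines, pausing whenever the buffer is full.
async function writeLines(filePath, count) {
  const stream = fs.createWriteStream(filePath);
  for (let i = 0; i < count; i += 1) {
    // write() returns false once the internal buffer reaches highWaterMark.
    if (!stream.write(`line ${i}\n`)) {
      // Wait until the buffered data has been flushed before writing more.
      await once(stream, 'drain');
    }
  }
  stream.end();
  // 'finish' fires after end() once all data has been flushed.
  await once(stream, 'finish');
}

// Scratch file in the OS temp directory so the sketch is self-contained.
const scratchDir = fs.mkdtempSync(path.join(os.tmpdir(), 'write-'));
const outFile = path.join(scratchDir, 'lines.txt');

writeLines(outFile, 100000).then(() => {
  console.log(`wrote ${fs.statSync(outFile).size} bytes`);
});
```

Ignoring the return value of `write()` still works, but the unwritten data then piles up in memory, which defeats the purpose of streaming.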

A duplex stream, such as a TCP socket, is both readable and writable: you can write data to the other end of the connection and read whatever it sends back. An example of using a duplex stream is:

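    const net = require('net');

    // Assumes an HTTP server is listening on localhost:80
    // (the host defaults to 'localhost' when omitted).
    const stream = net.createConnection({
      port: 80
    });

    // Readable side: print whatever the server sends back.
    stream.on('data', (chunk) => {
      console.log(chunk.toString());
    });

    // Writable side: HTTP/1.1 requires a Host header, and
    // "Connection: close" asks the server to close the socket after responding.
    stream.write('GET / HTTP/1.1\r\nHost: localhost\r\nConnection: close\r\n\r\n');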
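If you don't have a server on port 80 to test against, the following self-contained sketch shows both directions of a duplex stream on one machine. It starts a throwaway echo server on a free local port, sends one message, and collects the reply. The helper name `echoOnce` and the choice of `127.0.0.1` are assumptions for the example only.

```javascript
const net = require('net');

// Hypothetical helper: starts a local echo server, sends one message,
// and resolves with whatever comes back.
function echoOnce(message) {
  return new Promise((resolve, reject) => {
    // Each incoming socket is a duplex stream; piping it into itself
    // writes back everything that was read from it.
    const server = net.createServer((socket) => socket.pipe(socket));
    server.on('error', reject);

    // Port 0 asks the operating system for any free port.
    server.listen(0, '127.0.0.1', () => {
      const { port } = server.address();
      const client = net.createConnection({ port, host: '127.0.0.1' });
      client.setEncoding('utf8');

      let reply = '';
      client.on('data', (chunk) => { reply += chunk; }); // readable side
      client.on('error', reject);
      client.on('end', () => {
        server.close(); // stop listening so the process can exit
        resolve(reply);
      });

      // Writable side: end() sends the message and then closes our half
      // of the connection; the readable half stays open for the reply.
      client.end(message);
    });
  });
}

echoOnce('ping').then((reply) => console.log(reply)); // ping
```

The same socket object is written to and read from, which is what makes it a duplex stream rather than a pair of separate streams.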

In conclusion, streams let Node.js developers handle large amounts of data efficiently by reading and writing it in chunks. That keeps memory usage low when working with large files and data sets, and it makes it possible to process data as it arrives, as in video and audio streaming.
